diff --git a/modules/deepface-master/Dockerfile b/modules/deepface-master/Dockerfile

new file mode 100644

index 000000000..911df26df

--- /dev/null

+++ b/modules/deepface-master/Dockerfile

@@ -0,0 +1,54 @@

+# base image

+FROM python:3.8

+LABEL org.opencontainers.image.source https://github.com/serengil/deepface

+

+# -----------------------------------

+# create required folder

+RUN mkdir /app

+RUN mkdir /app/deepface

+

+# -----------------------------------

+# switch to application directory

+WORKDIR /app

+

+# -----------------------------------

+# update image os

+RUN apt-get update

+RUN apt-get install ffmpeg libsm6 libxext6 -y

+

+# -----------------------------------

+# Copy required files from repo into image

+COPY ./deepface /app/deepface

+COPY ./api/app.py /app/

+COPY ./api/api.py /app/

+COPY ./api/routes.py /app/

+COPY ./api/service.py /app/

+COPY ./requirements.txt /app/

+COPY ./setup.py /app/

+COPY ./README.md /app/

+

+# -----------------------------------

+# if you plan to use a GPU, you should install the 'tensorflow-gpu' package

+# RUN pip install --trusted-host pypi.org --trusted-host pypi.python.org --trusted-host=files.pythonhosted.org tensorflow-gpu

+

+# -----------------------------------

+# install deepface from pypi release (might be out-of-date)

+# RUN pip install --trusted-host pypi.org --trusted-host pypi.python.org --trusted-host=files.pythonhosted.org deepface

+# -----------------------------------

+# install deepface from source code (always up-to-date)

+RUN pip install --trusted-host pypi.org --trusted-host pypi.python.org --trusted-host=files.pythonhosted.org -e .

+

+# -----------------------------------

+# some packages are optional in deepface. activate if your task depends on one.

+# RUN pip install --trusted-host pypi.org --trusted-host pypi.python.org --trusted-host=files.pythonhosted.org cmake==3.24.1.1

+# RUN pip install --trusted-host pypi.org --trusted-host pypi.python.org --trusted-host=files.pythonhosted.org dlib==19.20.0

+# RUN pip install --trusted-host pypi.org --trusted-host pypi.python.org --trusted-host=files.pythonhosted.org lightgbm==2.3.1

+

+# -----------------------------------

+# environment variables

+ENV PYTHONUNBUFFERED=1

+

+# -----------------------------------

+# run the app (re-configure port if necessary)

+EXPOSE 5000

+CMD ["gunicorn", "--workers=1", "--timeout=3600", "--bind=0.0.0.0:5000", "app:create_app()"]

diff --git a/modules/deepface-master/LICENSE b/modules/deepface-master/LICENSE

new file mode 100644

index 000000000..2b0f9fbb7

--- /dev/null

+++ b/modules/deepface-master/LICENSE

@@ -0,0 +1,21 @@

+MIT License

+

+Copyright (c) 2019 Sefik Ilkin Serengil

+

+Permission is hereby granted, free of charge, to any person obtaining a copy

+of this software and associated documentation files (the "Software"), to deal

+in the Software without restriction, including without limitation the rights

+to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

+copies of the Software, and to permit persons to whom the Software is

+furnished to do so, subject to the following conditions:

+

+The above copyright notice and this permission notice shall be included in all

+copies or substantial portions of the Software.

+

+THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

+IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

+FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

+AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

+LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

+OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

+SOFTWARE.

diff --git a/modules/deepface-master/Makefile b/modules/deepface-master/Makefile

new file mode 100644

index 000000000..af58c7f7d

--- /dev/null

+++ b/modules/deepface-master/Makefile

@@ -0,0 +1,5 @@

+test:

+ cd tests && python -m pytest . -s --disable-warnings

+

+lint:

+ python -m pylint deepface/ --fail-under=10

\ No newline at end of file

diff --git a/modules/deepface-master/README.md b/modules/deepface-master/README.md

new file mode 100644

index 000000000..a4549c3f0

--- /dev/null

+++ b/modules/deepface-master/README.md

@@ -0,0 +1,375 @@

+# deepface

+

+

+

+[](https://pepy.tech/project/deepface)

+[](https://anaconda.org/conda-forge/deepface)

+[](https://github.com/serengil/deepface/stargazers)

+[](https://github.com/serengil/deepface/blob/master/LICENSE)

+[](https://github.com/serengil/deepface/actions/workflows/tests.yml)

+

+[](https://sefiks.com)

+[](https://www.youtube.com/@sefiks?sub_confirmation=1)

+[](https://twitter.com/intent/user?screen_name=serengil)

+[](https://www.patreon.com/serengil?repo=deepface)

+[](https://github.com/sponsors/serengil)

+

+[](https://doi.org/10.1109/ASYU50717.2020.9259802)

+[](https://doi.org/10.1109/ICEET53442.2021.9659697)

+

+

+

+

+

+Deepface is a lightweight [face recognition](https://sefiks.com/2018/08/06/deep-face-recognition-with-keras/) and facial attribute analysis ([age](https://sefiks.com/2019/02/13/apparent-age-and-gender-prediction-in-keras/), [gender](https://sefiks.com/2019/02/13/apparent-age-and-gender-prediction-in-keras/), [emotion](https://sefiks.com/2018/01/01/facial-expression-recognition-with-keras/) and [race](https://sefiks.com/2019/11/11/race-and-ethnicity-prediction-in-keras/)) framework for python. It is a hybrid face recognition framework wrapping **state-of-the-art** models: [`VGG-Face`](https://sefiks.com/2018/08/06/deep-face-recognition-with-keras/), [`Google FaceNet`](https://sefiks.com/2018/09/03/face-recognition-with-facenet-in-keras/), [`OpenFace`](https://sefiks.com/2019/07/21/face-recognition-with-openface-in-keras/), [`Facebook DeepFace`](https://sefiks.com/2020/02/17/face-recognition-with-facebook-deepface-in-keras/), [`DeepID`](https://sefiks.com/2020/06/16/face-recognition-with-deepid-in-keras/), [`ArcFace`](https://sefiks.com/2020/12/14/deep-face-recognition-with-arcface-in-keras-and-python/), [`Dlib`](https://sefiks.com/2020/07/11/face-recognition-with-dlib-in-python/) and `SFace`.

+

+Experiments show that human beings have 97.53% accuracy on facial recognition tasks whereas those models already reached and passed that accuracy level.

+

+## Installation [](https://pypi.org/project/deepface/) [](https://anaconda.org/conda-forge/deepface)

+

+The easiest way to install deepface is to download it from [`PyPI`](https://pypi.org/project/deepface/). It's going to install the library itself and its prerequisites as well.

+

+```shell

+$ pip install deepface

+```

+

+Secondly, DeepFace is also available at [`Conda`](https://anaconda.org/conda-forge/deepface). You can alternatively install the package via conda.

+

+```shell

+$ conda install -c conda-forge deepface

+```

+

+Thirdly, you can install deepface from its source code.

+

+```shell

+$ git clone https://github.com/serengil/deepface.git

+$ cd deepface

+$ pip install -e .

+```

+

+Then you will be able to import the library and use its functionalities.

+

+```python

+from deepface import DeepFace

+```

+

+**Facial Recognition** - [`Demo`](https://youtu.be/WnUVYQP4h44)

+

+A modern [**face recognition pipeline**](https://sefiks.com/2020/05/01/a-gentle-introduction-to-face-recognition-in-deep-learning/) consists of 5 common stages: [detect](https://sefiks.com/2020/08/25/deep-face-detection-with-opencv-in-python/), [align](https://sefiks.com/2020/02/23/face-alignment-for-face-recognition-in-python-within-opencv/), [normalize](https://sefiks.com/2020/11/20/facial-landmarks-for-face-recognition-with-dlib/), [represent](https://sefiks.com/2018/08/06/deep-face-recognition-with-keras/) and [verify](https://sefiks.com/2020/05/22/fine-tuning-the-threshold-in-face-recognition/). While Deepface handles all these common stages in the background, you don’t need to acquire in-depth knowledge about all the processes behind it. You can just call its verification, find or analysis function with a single line of code.

+

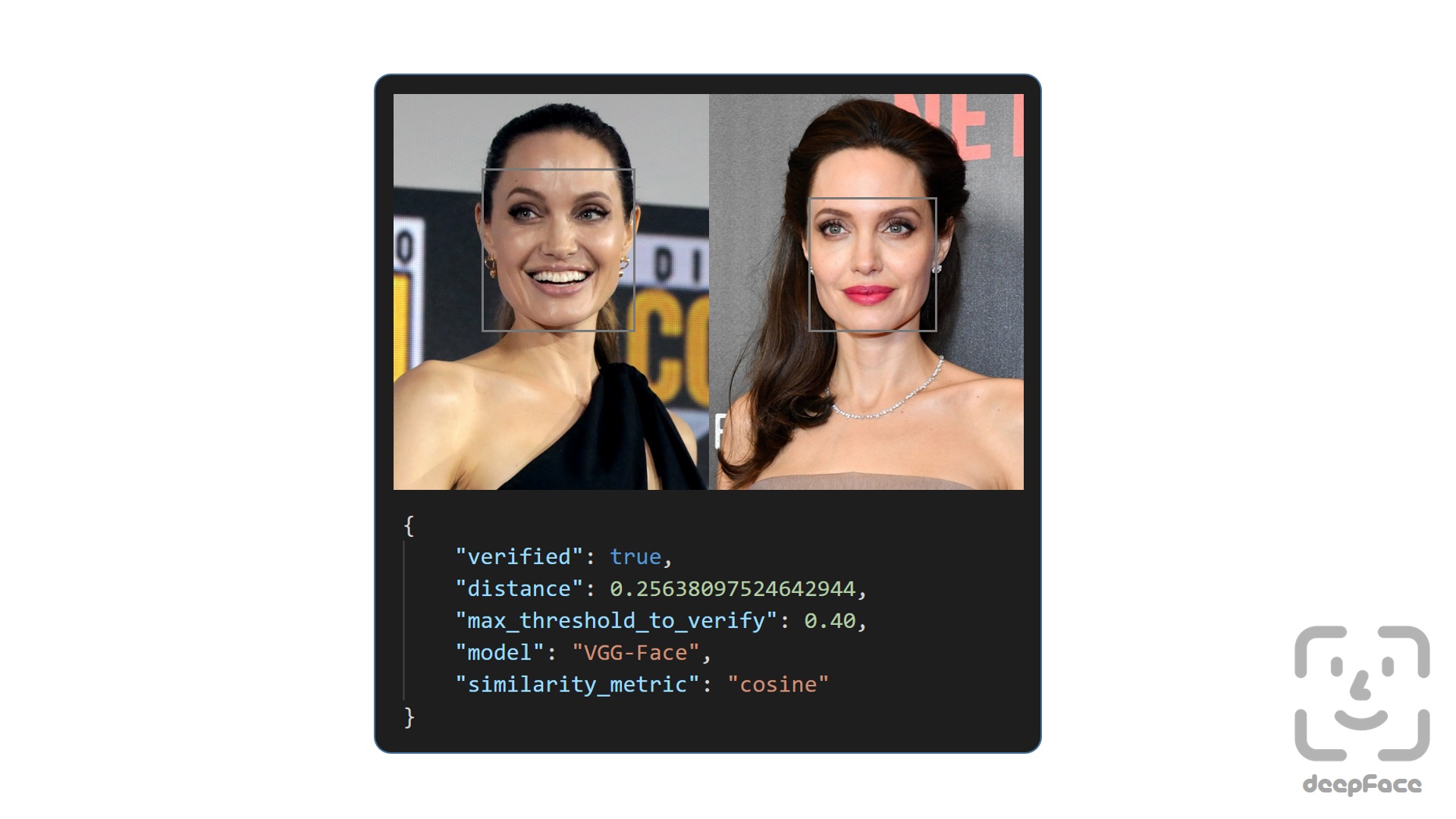

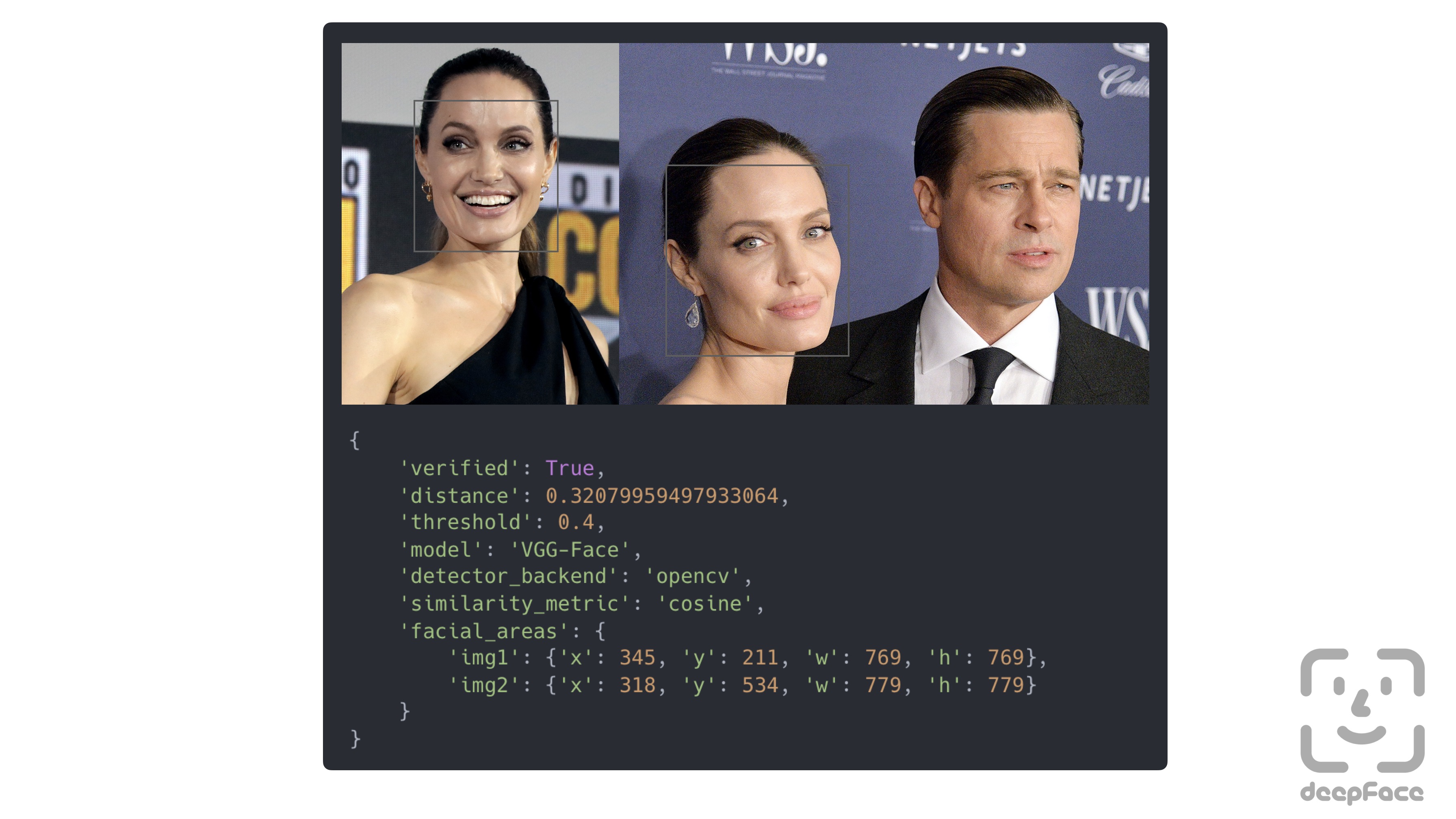

+**Face Verification** - [`Demo`](https://youtu.be/KRCvkNCOphE)

+

+This function verifies face pairs as same person or different persons. It expects exact image paths as inputs. Passing numpy or base64 encoded images is also welcome. Then, it is going to return a dictionary and you should check just its verified key.

+

+```python

+result = DeepFace.verify(img1_path = "img1.jpg", img2_path = "img2.jpg")

+```

+

+

+

+Verification function can also handle many faces in the face pairs. In this case, the most similar faces will be compared.

+

+

+

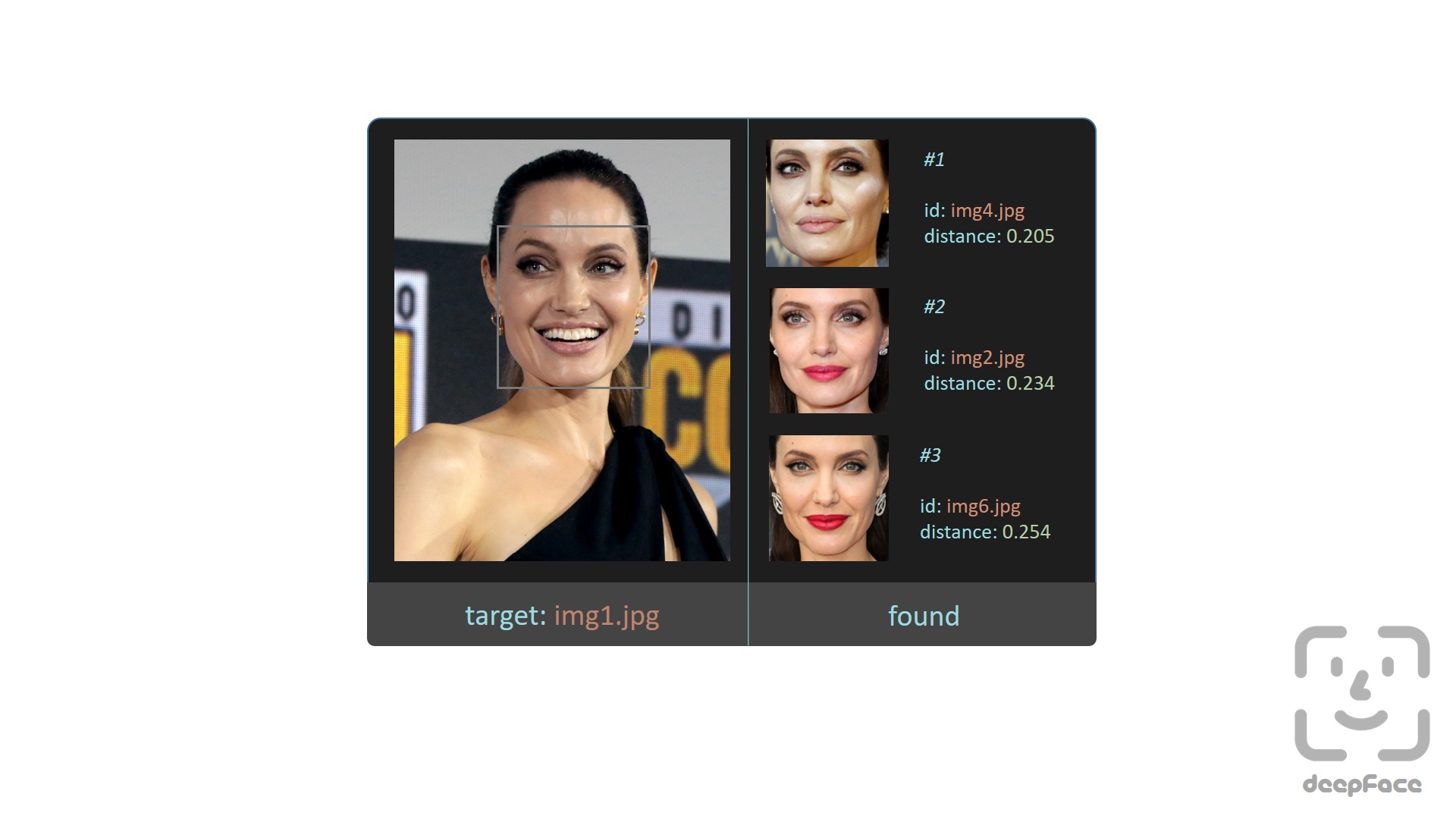

+**Face recognition** - [`Demo`](https://youtu.be/Hrjp-EStM_s)

+

+[Face recognition](https://sefiks.com/2020/05/25/large-scale-face-recognition-for-deep-learning/) requires applying face verification many times. Herein, deepface has an out-of-the-box find function to handle this action. It's going to look for the identity of input image in the database path and it will return list of pandas data frame as output. Meanwhile, facial embeddings of the facial database are stored in a pickle file to be searched faster in next time. Result is going to be the size of faces appearing in the source image. Besides, target images in the database can have many faces as well.

+

+

+```python

+dfs = DeepFace.find(img_path = "img1.jpg", db_path = "C:/workspace/my_db")

+```

+

+

+

+**Embeddings**

+

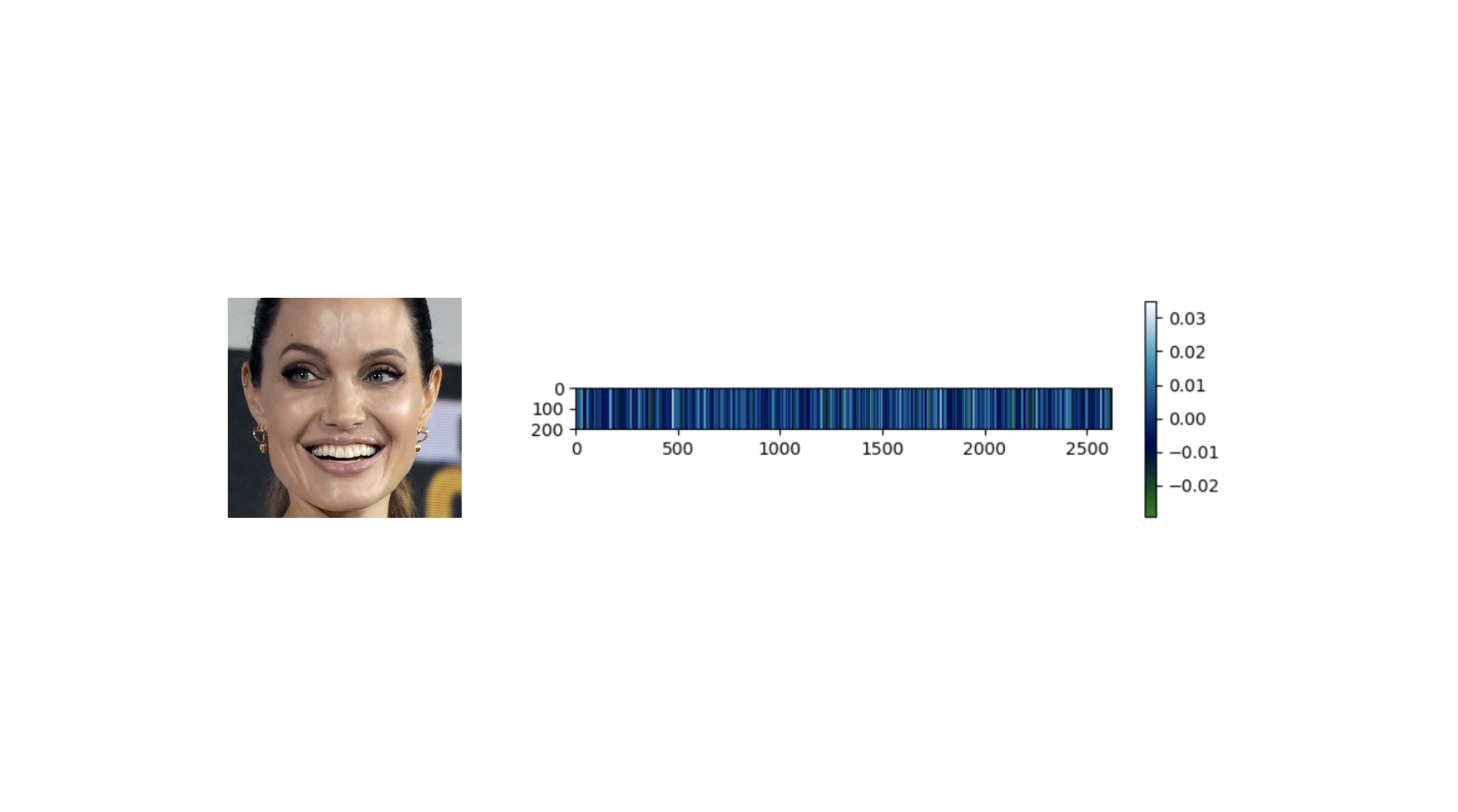

+Face recognition models basically represent facial images as multi-dimensional vectors. Sometimes, you need those embedding vectors directly. DeepFace comes with a dedicated representation function. Represent function returns a list of embeddings. Result is going to be the size of faces appearing in the image path.

+

+```python

+embedding_objs = DeepFace.represent(img_path = "img.jpg")

+```

+

+This function returns an array as embedding. The size of the embedding array would be different based on the model name. For instance, VGG-Face is the default model and it represents facial images as 4096 dimensional vectors.

+

+```python

+embedding = embedding_objs[0]["embedding"]

+assert isinstance(embedding, list)

+assert model_name = "VGG-Face" and len(embedding) == 4096

+```

+

+Here, embedding is also [plotted](https://sefiks.com/2020/05/01/a-gentle-introduction-to-face-recognition-in-deep-learning/) with 4096 slots horizontally. Each slot is corresponding to a dimension value in the embedding vector and dimension value is explained in the colorbar on the right. Similar to 2D barcodes, vertical dimension stores no information in the illustration.

+

+

+

+**Face recognition models** - [`Demo`](https://youtu.be/i_MOwvhbLdI)

+

+Deepface is a **hybrid** face recognition package. It currently wraps many **state-of-the-art** face recognition models: [`VGG-Face`](https://sefiks.com/2018/08/06/deep-face-recognition-with-keras/) , [`Google FaceNet`](https://sefiks.com/2018/09/03/face-recognition-with-facenet-in-keras/), [`OpenFace`](https://sefiks.com/2019/07/21/face-recognition-with-openface-in-keras/), [`Facebook DeepFace`](https://sefiks.com/2020/02/17/face-recognition-with-facebook-deepface-in-keras/), [`DeepID`](https://sefiks.com/2020/06/16/face-recognition-with-deepid-in-keras/), [`ArcFace`](https://sefiks.com/2020/12/14/deep-face-recognition-with-arcface-in-keras-and-python/), [`Dlib`](https://sefiks.com/2020/07/11/face-recognition-with-dlib-in-python/) and `SFace`. The default configuration uses VGG-Face model.

+

+```python

+models = [

+ "VGG-Face",

+ "Facenet",

+ "Facenet512",

+ "OpenFace",

+ "DeepFace",

+ "DeepID",

+ "ArcFace",

+ "Dlib",

+ "SFace",

+]

+

+#face verification

+result = DeepFace.verify(img1_path = "img1.jpg",

+ img2_path = "img2.jpg",

+ model_name = models[0]

+)

+

+#face recognition

+dfs = DeepFace.find(img_path = "img1.jpg",

+ db_path = "C:/workspace/my_db",

+ model_name = models[1]

+)

+

+#embeddings

+embedding_objs = DeepFace.represent(img_path = "img.jpg",

+ model_name = models[2]

+)

+```

+

+

+

+FaceNet, VGG-Face, ArcFace and Dlib are [overperforming](https://youtu.be/i_MOwvhbLdI) ones based on experiments. You can find out the scores of those models below on both [Labeled Faces in the Wild](https://sefiks.com/2020/08/27/labeled-faces-in-the-wild-for-face-recognition/) and YouTube Faces in the Wild data sets declared by its creators.

+

+| Model | LFW Score | YTF Score |

+| --- | --- | --- |

+| Facenet512 | 99.65% | - |

+| SFace | 99.60% | - |

+| ArcFace | 99.41% | - |

+| Dlib | 99.38 % | - |

+| Facenet | 99.20% | - |

+| VGG-Face | 98.78% | 97.40% |

+| *Human-beings* | *97.53%* | - |

+| OpenFace | 93.80% | - |

+| DeepID | - | 97.05% |

+

+**Similarity**

+

+Face recognition models are regular [convolutional neural networks](https://sefiks.com/2018/03/23/convolutional-autoencoder-clustering-images-with-neural-networks/) and they are responsible to represent faces as vectors. We expect that a face pair of same person should be [more similar](https://sefiks.com/2020/05/22/fine-tuning-the-threshold-in-face-recognition/) than a face pair of different persons.

+

+Similarity could be calculated by different metrics such as [Cosine Similarity](https://sefiks.com/2018/08/13/cosine-similarity-in-machine-learning/), Euclidean Distance and L2 form. The default configuration uses cosine similarity.

+

+```python

+metrics = ["cosine", "euclidean", "euclidean_l2"]

+

+#face verification

+result = DeepFace.verify(img1_path = "img1.jpg",

+ img2_path = "img2.jpg",

+ distance_metric = metrics[1]

+)

+

+#face recognition

+dfs = DeepFace.find(img_path = "img1.jpg",

+ db_path = "C:/workspace/my_db",

+ distance_metric = metrics[2]

+)

+```

+

+Euclidean L2 form [seems](https://youtu.be/i_MOwvhbLdI) to be more stable than cosine and regular Euclidean distance based on experiments.

+

+**Facial Attribute Analysis** - [`Demo`](https://youtu.be/GT2UeN85BdA)

+

+Deepface also comes with a strong facial attribute analysis module including [`age`](https://sefiks.com/2019/02/13/apparent-age-and-gender-prediction-in-keras/), [`gender`](https://sefiks.com/2019/02/13/apparent-age-and-gender-prediction-in-keras/), [`facial expression`](https://sefiks.com/2018/01/01/facial-expression-recognition-with-keras/) (including angry, fear, neutral, sad, disgust, happy and surprise) and [`race`](https://sefiks.com/2019/11/11/race-and-ethnicity-prediction-in-keras/) (including asian, white, middle eastern, indian, latino and black) predictions. Result is going to be the size of faces appearing in the source image.

+

+```python

+objs = DeepFace.analyze(img_path = "img4.jpg",

+ actions = ['age', 'gender', 'race', 'emotion']

+)

+```

+

+

+

+Age model got ± 4.65 MAE; gender model got 97.44% accuracy, 96.29% precision and 95.05% recall as mentioned in its [tutorial](https://sefiks.com/2019/02/13/apparent-age-and-gender-prediction-in-keras/).

+

+

+**Face Detectors** - [`Demo`](https://youtu.be/GZ2p2hj2H5k)

+

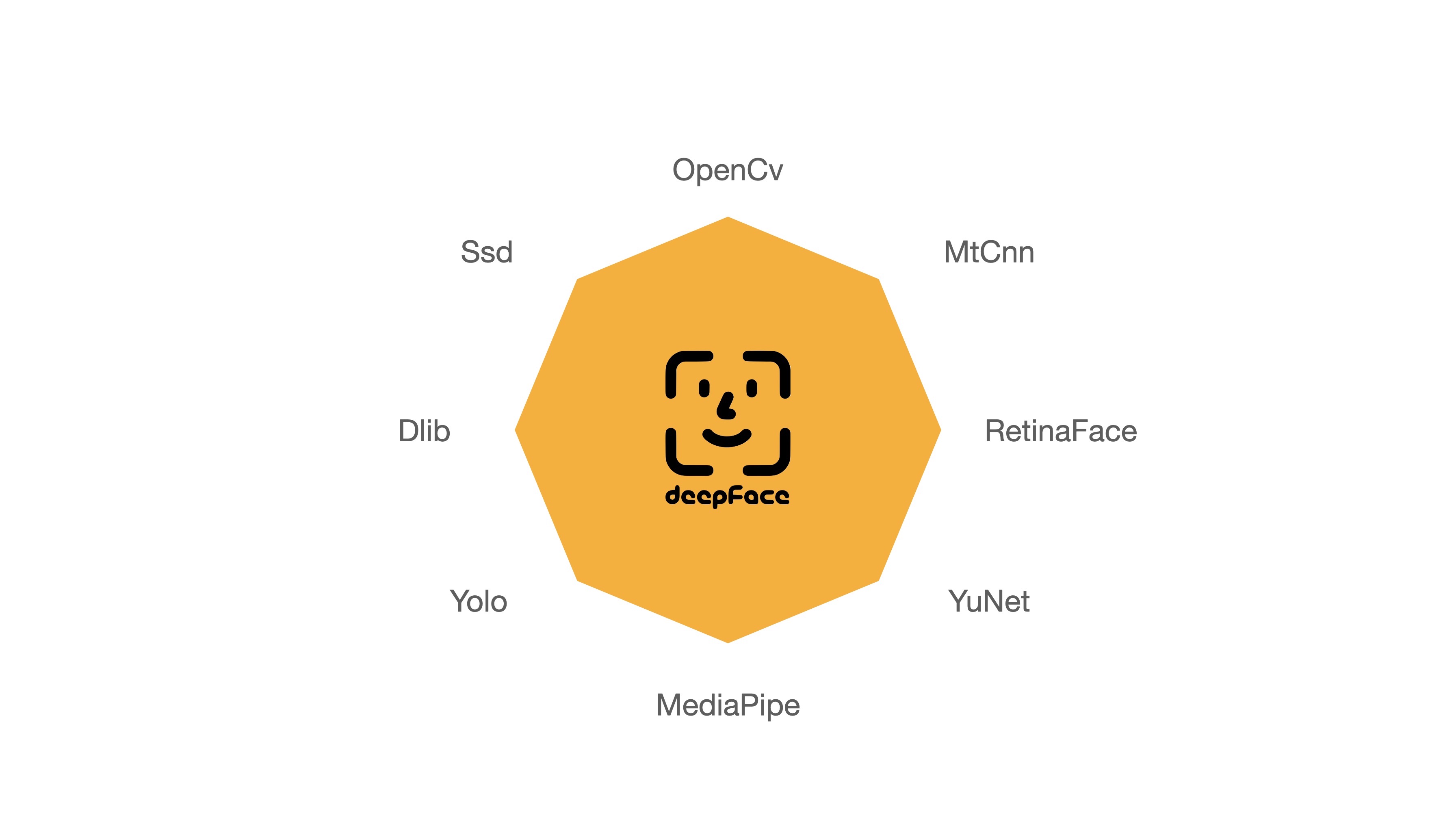

+Face detection and alignment are important early stages of a modern face recognition pipeline. Experiments show that just alignment increases the face recognition accuracy almost 1%. [`OpenCV`](https://sefiks.com/2020/02/23/face-alignment-for-face-recognition-in-python-within-opencv/), [`SSD`](https://sefiks.com/2020/08/25/deep-face-detection-with-opencv-in-python/), [`Dlib`](https://sefiks.com/2020/07/11/face-recognition-with-dlib-in-python/), [`MTCNN`](https://sefiks.com/2020/09/09/deep-face-detection-with-mtcnn-in-python/), [`Faster MTCNN`](https://github.com/timesler/facenet-pytorch), [`RetinaFace`](https://sefiks.com/2021/04/27/deep-face-detection-with-retinaface-in-python/), [`MediaPipe`](https://sefiks.com/2022/01/14/deep-face-detection-with-mediapipe/), [`YOLOv8 Face`](https://github.com/derronqi/yolov8-face) and [`YuNet`](https://github.com/ShiqiYu/libfacedetection) detectors are wrapped in deepface.

+

+

+

+All deepface functions accept an optional detector backend input argument. You can switch among those detectors with this argument. OpenCV is the default detector.

+

+```python

+backends = [

+ 'opencv',

+ 'ssd',

+ 'dlib',

+ 'mtcnn',

+ 'retinaface',

+ 'mediapipe',

+ 'yolov8',

+ 'yunet',

+ 'fastmtcnn',

+]

+

+#face verification

+obj = DeepFace.verify(img1_path = "img1.jpg",

+ img2_path = "img2.jpg",

+ detector_backend = backends[0]

+)

+

+#face recognition

+dfs = DeepFace.find(img_path = "img.jpg",

+ db_path = "my_db",

+ detector_backend = backends[1]

+)

+

+#embeddings

+embedding_objs = DeepFace.represent(img_path = "img.jpg",

+ detector_backend = backends[2]

+)

+

+#facial analysis

+demographies = DeepFace.analyze(img_path = "img4.jpg",

+ detector_backend = backends[3]

+)

+

+#face detection and alignment

+face_objs = DeepFace.extract_faces(img_path = "img.jpg",

+ target_size = (224, 224),

+ detector_backend = backends[4]

+)

+```

+

+Face recognition models are actually CNN models and they expect standard sized inputs. So, resizing is required before representation. To avoid deformation, deepface adds black padding pixels according to the target size argument after detection and alignment.

+

+

+

+[RetinaFace](https://sefiks.com/2021/04/27/deep-face-detection-with-retinaface-in-python/) and [MTCNN](https://sefiks.com/2020/09/09/deep-face-detection-with-mtcnn-in-python/) seem to overperform in detection and alignment stages but they are much slower. If the speed of your pipeline is more important, then you should use opencv or ssd. On the other hand, if you consider the accuracy, then you should use retinaface or mtcnn.

+

+The performance of RetinaFace is very satisfactory even in the crowd as seen in the following illustration. Besides, it comes with an incredible facial landmark detection performance. Highlighted red points show some facial landmarks such as eyes, nose and mouth. That's why, alignment score of RetinaFace is high as well.

+

+ +

+

The Yellow Angels - Fenerbahce Women's Volleyball Team

+

+

+You can find out more about RetinaFace on this [repo](https://github.com/serengil/retinaface).

+

+**Real Time Analysis** - [`Demo`](https://youtu.be/-c9sSJcx6wI)

+

+You can run deepface for real time videos as well. Stream function will access your webcam and apply both face recognition and facial attribute analysis. The function starts to analyze a frame if it can focus a face sequentially 5 frames. Then, it shows results 5 seconds.

+

+```python

+DeepFace.stream(db_path = "C:/User/Sefik/Desktop/database")

+```

+

+

+

+Even though face recognition is based on one-shot learning, you can use multiple face pictures of a person as well. You should rearrange your directory structure as illustrated below.

+

+```bash

+user

+├── database

+│ ├── Alice

+│ │ ├── Alice1.jpg

+│ │ ├── Alice2.jpg

+│ ├── Bob

+│ │ ├── Bob.jpg

+```

+

+**API** - [`Demo`](https://youtu.be/HeKCQ6U9XmI)

+

+DeepFace serves an API as well. You can clone [`/api`](https://github.com/serengil/deepface/tree/master/api) folder and run the api via gunicorn server. This will get a rest service up. In this way, you can call deepface from an external system such as mobile app or web.

+

+```shell

+cd scripts

+./service.sh

+```

+

+

+

+Face recognition, facial attribute analysis and vector representation functions are covered in the API. You are expected to call these functions as http post methods. Default service endpoints will be `http://localhost:5000/verify` for face recognition, `http://localhost:5000/analyze` for facial attribute analysis, and `http://localhost:5000/represent` for vector representation. You can pass input images as exact image paths on your environment, base64 encoded strings or images on web. [Here](https://github.com/serengil/deepface/tree/master/api), you can find a postman project to find out how these methods should be called.

+

+**Dockerized Service**

+

+You can deploy the deepface api on a kubernetes cluster with docker. The following [shell script](https://github.com/serengil/deepface/blob/master/scripts/dockerize.sh) will serve deepface on `localhost:5000`. You need to re-configure the [Dockerfile](https://github.com/serengil/deepface/blob/master/Dockerfile) if you want to change the port. Then, even if you do not have a development environment, you will be able to consume deepface services such as verify and analyze. You can also access the inside of the docker image to run deepface related commands. Please follow the instructions in the [shell script](https://github.com/serengil/deepface/blob/master/scripts/dockerize.sh).

+

+```shell

+cd scripts

+./dockerize.sh

+```

+

+

+

+**Command Line Interface** - [`Demo`](https://youtu.be/PKKTAr3ts2s)

+

+DeepFace comes with a command line interface as well. You are able to access its functions in command line as shown below. The command deepface expects the function name as 1st argument and function arguments thereafter.

+

+```shell

+#face verification

+$ deepface verify -img1_path tests/dataset/img1.jpg -img2_path tests/dataset/img2.jpg

+

+#facial analysis

+$ deepface analyze -img_path tests/dataset/img1.jpg

+```

+

+You can also run these commands if you are running deepface with docker. Please follow the instructions in the [shell script](https://github.com/serengil/deepface/blob/master/scripts/dockerize.sh#L17).

+

+## Contribution [](https://github.com/serengil/deepface/actions/workflows/tests.yml)

+

+Pull requests are more than welcome! You should run the unit tests locally by running [`test/unit_tests.py`](https://github.com/serengil/deepface/blob/master/tests/unit_tests.py) before creating a PR. Once a PR sent, GitHub test workflow will be run automatically and unit test results will be available in [GitHub actions](https://github.com/serengil/deepface/actions) before approval. Besides, workflow will evaluate the code with pylint as well.

+

+## Support

+

+There are many ways to support a project - starring⭐️ the GitHub repo is just one 🙏

+

+You can also support this work on [Patreon](https://www.patreon.com/serengil?repo=deepface) or [GitHub Sponsors](https://github.com/sponsors/serengil).

+

+

+ +

+

+## Citation

+

+Please cite deepface in your publications if it helps your research. Here are its BibTex entries:

+

+If you use deepface for facial recogntion purposes, please cite the this publication.

+

+```BibTeX

+@inproceedings{serengil2020lightface,

+ title = {LightFace: A Hybrid Deep Face Recognition Framework},

+ author = {Serengil, Sefik Ilkin and Ozpinar, Alper},

+ booktitle = {2020 Innovations in Intelligent Systems and Applications Conference (ASYU)},

+ pages = {23-27},

+ year = {2020},

+ doi = {10.1109/ASYU50717.2020.9259802},

+ url = {https://doi.org/10.1109/ASYU50717.2020.9259802},

+ organization = {IEEE}

+}

+```

+

+ If you use deepface for facial attribute analysis purposes such as age, gender, emotion or ethnicity prediction or face detection purposes, please cite the this publication.

+

+```BibTeX

+@inproceedings{serengil2021lightface,

+ title = {HyperExtended LightFace: A Facial Attribute Analysis Framework},

+ author = {Serengil, Sefik Ilkin and Ozpinar, Alper},

+ booktitle = {2021 International Conference on Engineering and Emerging Technologies (ICEET)},

+ pages = {1-4},

+ year = {2021},

+ doi = {10.1109/ICEET53442.2021.9659697},

+ url = {https://doi.org/10.1109/ICEET53442.2021.9659697},

+ organization = {IEEE}

+}

+```

+

+Also, if you use deepface in your GitHub projects, please add `deepface` in the `requirements.txt`.

+

+## Licence

+

+DeepFace is licensed under the MIT License - see [`LICENSE`](https://github.com/serengil/deepface/blob/master/LICENSE) for more details.

+

+DeepFace wraps some external face recognition models: [VGG-Face](http://www.robots.ox.ac.uk/~vgg/software/vgg_face/), [Facenet](https://github.com/davidsandberg/facenet/blob/master/LICENSE.md), [OpenFace](https://github.com/iwantooxxoox/Keras-OpenFace/blob/master/LICENSE), [DeepFace](https://github.com/swghosh/DeepFace), [DeepID](https://github.com/Ruoyiran/DeepID/blob/master/LICENSE.md), [ArcFace](https://github.com/leondgarse/Keras_insightface/blob/master/LICENSE), [Dlib](https://github.com/davisking/dlib/blob/master/dlib/LICENSE.txt), and [SFace](https://github.com/opencv/opencv_zoo/blob/master/models/face_recognition_sface/LICENSE). Besides, age, gender and race / ethnicity models were trained on the backbone of VGG-Face with transfer learning. Licence types will be inherited if you are going to use those models. Please check the license types of those models for production purposes.

+

+DeepFace [logo](https://thenounproject.com/term/face-recognition/2965879/) is created by [Adrien Coquet](https://thenounproject.com/coquet_adrien/) and it is licensed under [Creative Commons: By Attribution 3.0 License](https://creativecommons.org/licenses/by/3.0/).

diff --git a/modules/deepface-master/api/api.py b/modules/deepface-master/api/api.py

new file mode 100644

index 000000000..e6f66832e

--- /dev/null

+++ b/modules/deepface-master/api/api.py

@@ -0,0 +1,9 @@

+import argparse

+import app

+

+if __name__ == "__main__":

+ deepface_app = app.create_app()

+ parser = argparse.ArgumentParser()

+ parser.add_argument("-p", "--port", type=int, default=5000, help="Port of serving api")

+ args = parser.parse_args()

+ deepface_app.run(host="0.0.0.0", port=args.port)

diff --git a/modules/deepface-master/api/app.py b/modules/deepface-master/api/app.py

new file mode 100644

index 000000000..7ad23ee11

--- /dev/null

+++ b/modules/deepface-master/api/app.py

@@ -0,0 +1,9 @@

+# 3rd parth dependencies

+from flask import Flask

+from routes import blueprint

+

+

+def create_app():

+ app = Flask(__name__)

+ app.register_blueprint(blueprint)

+ return app

diff --git a/modules/deepface-master/api/deepface-api.postman_collection.json b/modules/deepface-master/api/deepface-api.postman_collection.json

new file mode 100644

index 000000000..0cbb0a388

--- /dev/null

+++ b/modules/deepface-master/api/deepface-api.postman_collection.json

@@ -0,0 +1,102 @@

+{

+ "info": {

+ "_postman_id": "4c0b144e-4294-4bdd-8072-bcb326b1fed2",

+ "name": "deepface-api",

+ "schema": "https://schema.getpostman.com/json/collection/v2.1.0/collection.json"

+ },

+ "item": [

+ {

+ "name": "Represent",

+ "request": {

+ "method": "POST",

+ "header": [],

+ "body": {

+ "mode": "raw",

+ "raw": "{\n \"model_name\": \"Facenet\",\n \"img\": \"/Users/sefik/Desktop/deepface/tests/dataset/img1.jpg\"\n}",

+ "options": {

+ "raw": {

+ "language": "json"

+ }

+ }

+ },

+ "url": {

+ "raw": "http://127.0.0.1:5000/represent",

+ "protocol": "http",

+ "host": [

+ "127",

+ "0",

+ "0",

+ "1"

+ ],

+ "port": "5000",

+ "path": [

+ "represent"

+ ]

+ }

+ },

+ "response": []

+ },

+ {

+ "name": "Face verification",

+ "request": {

+ "method": "POST",

+ "header": [],

+ "body": {

+ "mode": "raw",

+ "raw": " {\n \t\"img1_path\": \"/Users/sefik/Desktop/deepface/tests/dataset/img1.jpg\",\n \"img2_path\": \"/Users/sefik/Desktop/deepface/tests/dataset/img2.jpg\",\n \"model_name\": \"Facenet\",\n \"detector_backend\": \"mtcnn\",\n \"distance_metric\": \"euclidean\"\n }",

+ "options": {

+ "raw": {

+ "language": "json"

+ }

+ }

+ },

+ "url": {

+ "raw": "http://127.0.0.1:5000/verify",

+ "protocol": "http",

+ "host": [

+ "127",

+ "0",

+ "0",

+ "1"

+ ],

+ "port": "5000",

+ "path": [

+ "verify"

+ ]

+ }

+ },

+ "response": []

+ },

+ {

+ "name": "Face analysis",

+ "request": {

+ "method": "POST",

+ "header": [],

+ "body": {

+ "mode": "raw",

+ "raw": "{\n \"img_path\": \"/Users/sefik/Desktop/deepface/tests/dataset/couple.jpg\",\n \"actions\": [\"age\", \"gender\", \"emotion\", \"race\"]\n}",

+ "options": {

+ "raw": {

+ "language": "json"

+ }

+ }

+ },

+ "url": {

+ "raw": "http://127.0.0.1:5000/analyze",

+ "protocol": "http",

+ "host": [

+ "127",

+ "0",

+ "0",

+ "1"

+ ],

+ "port": "5000",

+ "path": [

+ "analyze"

+ ]

+ }

+ },

+ "response": []

+ }

+ ]

+}

\ No newline at end of file

diff --git a/modules/deepface-master/api/routes.py b/modules/deepface-master/api/routes.py

new file mode 100644

index 000000000..45808320b

--- /dev/null

+++ b/modules/deepface-master/api/routes.py

@@ -0,0 +1,100 @@

+from flask import Blueprint, request

+import service

+

+blueprint = Blueprint("routes", __name__)

+

+

+@blueprint.route("/")

+def home():

+ return "

+

+

+## Citation

+

+Please cite deepface in your publications if it helps your research. Here are its BibTex entries:

+

+If you use deepface for facial recogntion purposes, please cite the this publication.

+

+```BibTeX

+@inproceedings{serengil2020lightface,

+ title = {LightFace: A Hybrid Deep Face Recognition Framework},

+ author = {Serengil, Sefik Ilkin and Ozpinar, Alper},

+ booktitle = {2020 Innovations in Intelligent Systems and Applications Conference (ASYU)},

+ pages = {23-27},

+ year = {2020},

+ doi = {10.1109/ASYU50717.2020.9259802},

+ url = {https://doi.org/10.1109/ASYU50717.2020.9259802},

+ organization = {IEEE}

+}

+```

+

+ If you use deepface for facial attribute analysis purposes such as age, gender, emotion or ethnicity prediction or face detection purposes, please cite the this publication.

+

+```BibTeX

+@inproceedings{serengil2021lightface,

+ title = {HyperExtended LightFace: A Facial Attribute Analysis Framework},

+ author = {Serengil, Sefik Ilkin and Ozpinar, Alper},

+ booktitle = {2021 International Conference on Engineering and Emerging Technologies (ICEET)},

+ pages = {1-4},

+ year = {2021},

+ doi = {10.1109/ICEET53442.2021.9659697},

+ url = {https://doi.org/10.1109/ICEET53442.2021.9659697},

+ organization = {IEEE}

+}

+```

+

+Also, if you use deepface in your GitHub projects, please add `deepface` in the `requirements.txt`.

+

+## Licence

+

+DeepFace is licensed under the MIT License - see [`LICENSE`](https://github.com/serengil/deepface/blob/master/LICENSE) for more details.

+

+DeepFace wraps some external face recognition models: [VGG-Face](http://www.robots.ox.ac.uk/~vgg/software/vgg_face/), [Facenet](https://github.com/davidsandberg/facenet/blob/master/LICENSE.md), [OpenFace](https://github.com/iwantooxxoox/Keras-OpenFace/blob/master/LICENSE), [DeepFace](https://github.com/swghosh/DeepFace), [DeepID](https://github.com/Ruoyiran/DeepID/blob/master/LICENSE.md), [ArcFace](https://github.com/leondgarse/Keras_insightface/blob/master/LICENSE), [Dlib](https://github.com/davisking/dlib/blob/master/dlib/LICENSE.txt), and [SFace](https://github.com/opencv/opencv_zoo/blob/master/models/face_recognition_sface/LICENSE). Besides, age, gender and race / ethnicity models were trained on the backbone of VGG-Face with transfer learning. Licence types will be inherited if you are going to use those models. Please check the license types of those models for production purposes.

+

+DeepFace [logo](https://thenounproject.com/term/face-recognition/2965879/) is created by [Adrien Coquet](https://thenounproject.com/coquet_adrien/) and it is licensed under [Creative Commons: By Attribution 3.0 License](https://creativecommons.org/licenses/by/3.0/).

diff --git a/modules/deepface-master/api/api.py b/modules/deepface-master/api/api.py

new file mode 100644

index 000000000..e6f66832e

--- /dev/null

+++ b/modules/deepface-master/api/api.py

@@ -0,0 +1,9 @@

+import argparse

+import app

+

+if __name__ == "__main__":

+ deepface_app = app.create_app()

+ parser = argparse.ArgumentParser()

+ parser.add_argument("-p", "--port", type=int, default=5000, help="Port of serving api")

+ args = parser.parse_args()

+ deepface_app.run(host="0.0.0.0", port=args.port)

diff --git a/modules/deepface-master/api/app.py b/modules/deepface-master/api/app.py

new file mode 100644

index 000000000..7ad23ee11

--- /dev/null

+++ b/modules/deepface-master/api/app.py

@@ -0,0 +1,9 @@

+# 3rd parth dependencies

+from flask import Flask

+from routes import blueprint

+

+

+def create_app():

+ app = Flask(__name__)

+ app.register_blueprint(blueprint)

+ return app

diff --git a/modules/deepface-master/api/deepface-api.postman_collection.json b/modules/deepface-master/api/deepface-api.postman_collection.json

new file mode 100644

index 000000000..0cbb0a388

--- /dev/null

+++ b/modules/deepface-master/api/deepface-api.postman_collection.json

@@ -0,0 +1,102 @@

+{

+ "info": {

+ "_postman_id": "4c0b144e-4294-4bdd-8072-bcb326b1fed2",

+ "name": "deepface-api",

+ "schema": "https://schema.getpostman.com/json/collection/v2.1.0/collection.json"

+ },

+ "item": [

+ {

+ "name": "Represent",

+ "request": {

+ "method": "POST",

+ "header": [],

+ "body": {

+ "mode": "raw",

+ "raw": "{\n \"model_name\": \"Facenet\",\n \"img\": \"/Users/sefik/Desktop/deepface/tests/dataset/img1.jpg\"\n}",

+ "options": {

+ "raw": {

+ "language": "json"

+ }

+ }

+ },

+ "url": {

+ "raw": "http://127.0.0.1:5000/represent",

+ "protocol": "http",

+ "host": [

+ "127",

+ "0",

+ "0",

+ "1"

+ ],

+ "port": "5000",

+ "path": [

+ "represent"

+ ]

+ }

+ },

+ "response": []

+ },

+ {

+ "name": "Face verification",

+ "request": {

+ "method": "POST",

+ "header": [],

+ "body": {

+ "mode": "raw",

+ "raw": " {\n \t\"img1_path\": \"/Users/sefik/Desktop/deepface/tests/dataset/img1.jpg\",\n \"img2_path\": \"/Users/sefik/Desktop/deepface/tests/dataset/img2.jpg\",\n \"model_name\": \"Facenet\",\n \"detector_backend\": \"mtcnn\",\n \"distance_metric\": \"euclidean\"\n }",

+ "options": {

+ "raw": {

+ "language": "json"

+ }

+ }

+ },

+ "url": {

+ "raw": "http://127.0.0.1:5000/verify",

+ "protocol": "http",

+ "host": [

+ "127",

+ "0",

+ "0",

+ "1"

+ ],

+ "port": "5000",

+ "path": [

+ "verify"

+ ]

+ }

+ },

+ "response": []

+ },

+ {

+ "name": "Face analysis",

+ "request": {

+ "method": "POST",

+ "header": [],

+ "body": {

+ "mode": "raw",

+ "raw": "{\n \"img_path\": \"/Users/sefik/Desktop/deepface/tests/dataset/couple.jpg\",\n \"actions\": [\"age\", \"gender\", \"emotion\", \"race\"]\n}",

+ "options": {

+ "raw": {

+ "language": "json"

+ }

+ }

+ },

+ "url": {

+ "raw": "http://127.0.0.1:5000/analyze",

+ "protocol": "http",

+ "host": [

+ "127",

+ "0",

+ "0",

+ "1"

+ ],

+ "port": "5000",

+ "path": [

+ "analyze"

+ ]

+ }

+ },

+ "response": []

+ }

+ ]

+}

\ No newline at end of file

diff --git a/modules/deepface-master/api/routes.py b/modules/deepface-master/api/routes.py

new file mode 100644

index 000000000..45808320b

--- /dev/null

+++ b/modules/deepface-master/api/routes.py

@@ -0,0 +1,100 @@

+from flask import Blueprint, request

+import service

+

+blueprint = Blueprint("routes", __name__)

+

+

+@blueprint.route("/")

+def home():

+ return "Welcome to DeepFace API!

"

+

+

+@blueprint.route("/represent", methods=["POST"])

+def represent():

+ input_args = request.get_json()

+

+ if input_args is None:

+ return {"message": "empty input set passed"}

+

+ img_path = input_args.get("img")

+ if img_path is None:

+ return {"message": "you must pass img_path input"}

+

+ model_name = input_args.get("model_name", "VGG-Face")

+ detector_backend = input_args.get("detector_backend", "opencv")

+ enforce_detection = input_args.get("enforce_detection", True)

+ align = input_args.get("align", True)

+

+ obj = service.represent(

+ img_path=img_path,

+ model_name=model_name,

+ detector_backend=detector_backend,

+ enforce_detection=enforce_detection,

+ align=align,

+ )

+

+ return obj

+

+

+@blueprint.route("/verify", methods=["POST"])

+def verify():

+ input_args = request.get_json()

+

+ if input_args is None:

+ return {"message": "empty input set passed"}

+

+ img1_path = input_args.get("img1_path")

+ img2_path = input_args.get("img2_path")

+

+ if img1_path is None:

+ return {"message": "you must pass img1_path input"}

+

+ if img2_path is None:

+ return {"message": "you must pass img2_path input"}

+

+ model_name = input_args.get("model_name", "VGG-Face")

+ detector_backend = input_args.get("detector_backend", "opencv")

+ enforce_detection = input_args.get("enforce_detection", True)

+ distance_metric = input_args.get("distance_metric", "cosine")

+ align = input_args.get("align", True)

+

+ verification = service.verify(

+ img1_path=img1_path,

+ img2_path=img2_path,

+ model_name=model_name,

+ detector_backend=detector_backend,

+ distance_metric=distance_metric,

+ align=align,

+ enforce_detection=enforce_detection,

+ )

+

+ verification["verified"] = str(verification["verified"])

+

+ return verification

+

+

+@blueprint.route("/analyze", methods=["POST"])

+def analyze():

+ input_args = request.get_json()

+

+ if input_args is None:

+ return {"message": "empty input set passed"}

+

+ img_path = input_args.get("img_path")

+ if img_path is None:

+ return {"message": "you must pass img_path input"}

+

+ detector_backend = input_args.get("detector_backend", "opencv")

+ enforce_detection = input_args.get("enforce_detection", True)

+ align = input_args.get("align", True)

+ actions = input_args.get("actions", ["age", "gender", "emotion", "race"])

+

+ demographies = service.analyze(

+ img_path=img_path,

+ actions=actions,

+ detector_backend=detector_backend,

+ enforce_detection=enforce_detection,

+ align=align,

+ )

+

+ return demographies

diff --git a/modules/deepface-master/api/service.py b/modules/deepface-master/api/service.py

new file mode 100644

index 000000000..f3a9bbc95

--- /dev/null

+++ b/modules/deepface-master/api/service.py

@@ -0,0 +1,42 @@

+from deepface import DeepFace

+

+

+def represent(img_path, model_name, detector_backend, enforce_detection, align):

+ result = {}

+ embedding_objs = DeepFace.represent(

+ img_path=img_path,

+ model_name=model_name,

+ detector_backend=detector_backend,

+ enforce_detection=enforce_detection,

+ align=align,

+ )

+ result["results"] = embedding_objs

+ return result

+

+

+def verify(

+ img1_path, img2_path, model_name, detector_backend, distance_metric, enforce_detection, align

+):

+ obj = DeepFace.verify(

+ img1_path=img1_path,

+ img2_path=img2_path,

+ model_name=model_name,

+ detector_backend=detector_backend,

+ distance_metric=distance_metric,

+ align=align,

+ enforce_detection=enforce_detection,

+ )

+ return obj

+

+

+def analyze(img_path, actions, detector_backend, enforce_detection, align):

+ result = {}

+ demographies = DeepFace.analyze(

+ img_path=img_path,

+ actions=actions,

+ detector_backend=detector_backend,

+ enforce_detection=enforce_detection,

+ align=align,

+ )

+ result["results"] = demographies

+ return result

diff --git a/modules/deepface-master/deepface/DeepFace.py b/modules/deepface-master/deepface/DeepFace.py

new file mode 100644

index 000000000..84d5f42fc

--- /dev/null

+++ b/modules/deepface-master/deepface/DeepFace.py

@@ -0,0 +1,485 @@

+# common dependencies

+import os

+import warnings

+import logging

+from typing import Any, Dict, List, Tuple, Union

+

+# 3rd party dependencies

+import numpy as np

+import pandas as pd

+import tensorflow as tf

+from deprecated import deprecated

+

+# package dependencies

+from deepface.commons import functions

+from deepface.commons.logger import Logger

+from deepface.modules import (

+ modeling,

+ representation,

+ verification,

+ recognition,

+ demography,

+ detection,

+ realtime,

+)

+

+# pylint: disable=no-else-raise, simplifiable-if-expression

+

+logger = Logger(module="DeepFace")

+

+# -----------------------------------

+# configurations for dependencies

+

+warnings.filterwarnings("ignore")

+os.environ["TF_CPP_MIN_LOG_LEVEL"] = "3"

+tf_version = int(tf.__version__.split(".", maxsplit=1)[0])

+if tf_version == 2:

+ tf.get_logger().setLevel(logging.ERROR)

+ from tensorflow.keras.models import Model

+else:

+ from keras.models import Model

+# -----------------------------------

+

+

+def build_model(model_name: str) -> Union[Model, Any]:

+ """

+ This function builds a deepface model

+ Parameters:

+ model_name (string): face recognition or facial attribute model

+ VGG-Face, Facenet, OpenFace, DeepFace, DeepID for face recognition

+ Age, Gender, Emotion, Race for facial attributes

+

+ Returns:

+ built deepface model ( (tf.)keras.models.Model )

+ """

+ return modeling.build_model(model_name=model_name)

+

+

+def verify(

+ img1_path: Union[str, np.ndarray],

+ img2_path: Union[str, np.ndarray],

+ model_name: str = "VGG-Face",

+ detector_backend: str = "opencv",

+ distance_metric: str = "cosine",

+ enforce_detection: bool = True,

+ align: bool = True,

+ normalization: str = "base",

+) -> Dict[str, Any]:

+ """

+ This function verifies an image pair is same person or different persons. In the background,

+ verification function represents facial images as vectors and then calculates the similarity

+ between those vectors. Vectors of same person images should have more similarity (or less

+ distance) than vectors of different persons.

+

+ Parameters:

+ img1_path, img2_path: exact image path as string. numpy array (BGR) or based64 encoded

+ images are also welcome. If one of pair has more than one face, then we will compare the

+ face pair with max similarity.

+

+ model_name (str): VGG-Face, Facenet, Facenet512, OpenFace, DeepFace, DeepID, Dlib

+ , ArcFace and SFace

+

+ distance_metric (string): cosine, euclidean, euclidean_l2

+

+ enforce_detection (boolean): If no face could not be detected in an image, then this

+ function will return exception by default. Set this to False not to have this exception.

+ This might be convenient for low resolution images.

+

+ detector_backend (string): set face detector backend to opencv, retinaface, mtcnn, ssd,

+ dlib, mediapipe or yolov8.

+

+ align (boolean): alignment according to the eye positions.

+

+ normalization (string): normalize the input image before feeding to model

+

+ Returns:

+ Verify function returns a dictionary.

+

+ {

+ "verified": True

+ , "distance": 0.2563

+ , "max_threshold_to_verify": 0.40

+ , "model": "VGG-Face"

+ , "similarity_metric": "cosine"

+ , 'facial_areas': {

+ 'img1': {'x': 345, 'y': 211, 'w': 769, 'h': 769},

+ 'img2': {'x': 318, 'y': 534, 'w': 779, 'h': 779}

+ }

+ , "time": 2

+ }

+

+ """

+

+ return verification.verify(

+ img1_path=img1_path,

+ img2_path=img2_path,

+ model_name=model_name,

+ detector_backend=detector_backend,

+ distance_metric=distance_metric,

+ enforce_detection=enforce_detection,

+ align=align,

+ normalization=normalization,

+ )

+

+

+def analyze(

+ img_path: Union[str, np.ndarray],

+ actions: Union[tuple, list] = ("emotion", "age", "gender", "race"),

+ enforce_detection: bool = True,

+ detector_backend: str = "opencv",

+ align: bool = True,

+ silent: bool = False,

+) -> List[Dict[str, Any]]:

+ """

+ This function analyzes facial attributes including age, gender, emotion and race.

+ In the background, analysis function builds convolutional neural network models to

+ classify age, gender, emotion and race of the input image.

+

+ Parameters:

+ img_path: exact image path, numpy array (BGR) or base64 encoded image could be passed.

+ If source image has more than one face, then result will be size of number of faces

+ appearing in the image.

+

+ actions (tuple): The default is ('age', 'gender', 'emotion', 'race'). You can drop

+ some of those attributes.

+

+ enforce_detection (bool): The function throws exception if no face detected by default.

+ Set this to False if you don't want to get exception. This might be convenient for low

+ resolution images.

+

+ detector_backend (string): set face detector backend to opencv, retinaface, mtcnn, ssd,

+ dlib, mediapipe or yolov8.

+

+ align (boolean): alignment according to the eye positions.

+

+ silent (boolean): disable (some) log messages

+

+ Returns:

+ The function returns a list of dictionaries for each face appearing in the image.

+

+ [

+ {

+ "region": {'x': 230, 'y': 120, 'w': 36, 'h': 45},

+ "age": 28.66,

+ 'face_confidence': 0.9993908405303955,

+ "dominant_gender": "Woman",

+ "gender": {

+ 'Woman': 99.99407529830933,

+ 'Man': 0.005928758764639497,

+ }

+ "dominant_emotion": "neutral",

+ "emotion": {

+ 'sad': 37.65260875225067,

+ 'angry': 0.15512987738475204,

+ 'surprise': 0.0022171278033056296,

+ 'fear': 1.2489334680140018,

+ 'happy': 4.609785228967667,

+ 'disgust': 9.698561953541684e-07,

+ 'neutral': 56.33133053779602

+ }

+ "dominant_race": "white",

+ "race": {

+ 'indian': 0.5480832420289516,

+ 'asian': 0.7830780930817127,

+ 'latino hispanic': 2.0677512511610985,

+ 'black': 0.06337375962175429,

+ 'middle eastern': 3.088453598320484,

+ 'white': 93.44925880432129

+ }

+ }

+ ]

+ """

+ return demography.analyze(

+ img_path=img_path,

+ actions=actions,

+ enforce_detection=enforce_detection,

+ detector_backend=detector_backend,

+ align=align,

+ silent=silent,

+ )

+

+

+def find(

+ img_path: Union[str, np.ndarray],

+ db_path: str,

+ model_name: str = "VGG-Face",

+ distance_metric: str = "cosine",

+ enforce_detection: bool = True,

+ detector_backend: str = "opencv",

+ align: bool = True,

+ normalization: str = "base",

+ silent: bool = False,

+) -> List[pd.DataFrame]:

+ """

+ This function applies verification several times and find the identities in a database

+

+ Parameters:

+ img_path: exact image path, numpy array (BGR) or based64 encoded image.

+ Source image can have many faces. Then, result will be the size of number of

+ faces in the source image.

+

+ db_path (string): You should store some image files in a folder and pass the

+ exact folder path to this. A database image can also have many faces.

+ Then, all detected faces in db side will be considered in the decision.

+

+ model_name (string): VGG-Face, Facenet, Facenet512, OpenFace, DeepFace, DeepID,

+ Dlib, ArcFace, SFace or Ensemble

+

+ distance_metric (string): cosine, euclidean, euclidean_l2

+

+ enforce_detection (bool): The function throws exception if a face could not be detected.

+ Set this to False if you don't want to get exception. This might be convenient for low

+ resolution images.

+

+ detector_backend (string): set face detector backend to opencv, retinaface, mtcnn, ssd,

+ dlib, mediapipe or yolov8.

+

+ align (boolean): alignment according to the eye positions.

+

+ normalization (string): normalize the input image before feeding to model

+

+ silent (boolean): disable some logging and progress bars

+

+ Returns:

+ This function returns list of pandas data frame. Each item of the list corresponding to

+ an identity in the img_path.

+ """

+ return recognition.find(

+ img_path=img_path,

+ db_path=db_path,

+ model_name=model_name,

+ distance_metric=distance_metric,

+ enforce_detection=enforce_detection,

+ detector_backend=detector_backend,

+ align=align,

+ normalization=normalization,

+ silent=silent,

+ )

+

+

+def represent(

+ img_path: Union[str, np.ndarray],

+ model_name: str = "VGG-Face",

+ enforce_detection: bool = True,

+ detector_backend: str = "opencv",

+ align: bool = True,

+ normalization: str = "base",

+) -> List[Dict[str, Any]]:

+ """

+ This function represents facial images as vectors. The function uses convolutional neural

+ networks models to generate vector embeddings.

+

+ Parameters:

+ img_path (string): exact image path. Alternatively, numpy array (BGR) or based64

+ encoded images could be passed. Source image can have many faces. Then, result will

+ be the size of number of faces appearing in the source image.

+

+ model_name (string): VGG-Face, Facenet, Facenet512, OpenFace, DeepFace, DeepID, Dlib,

+ ArcFace, SFace

+

+ enforce_detection (boolean): If no face could not be detected in an image, then this

+ function will return exception by default. Set this to False not to have this exception.

+ This might be convenient for low resolution images.

+

+ detector_backend (string): set face detector backend to opencv, retinaface, mtcnn, ssd,

+ dlib, mediapipe or yolov8. A special value `skip` could be used to skip face-detection

+ and only encode the given image.

+

+ align (boolean): alignment according to the eye positions.

+

+ normalization (string): normalize the input image before feeding to model

+

+ Returns:

+ Represent function returns a list of object, each object has fields as follows:

+ {

+ // Multidimensional vector

+ // The number of dimensions is changing based on the reference model.

+ // E.g. FaceNet returns 128 dimensional vector;

+ // VGG-Face returns 2622 dimensional vector.

+ "embedding": np.array,

+

+ // Detected Facial-Area by Face detection in dict format.

+ // (x, y) is left-corner point, and (w, h) is the width and height

+ // If `detector_backend` == `skip`, it is the full image area and nonsense.

+ "facial_area": dict{"x": int, "y": int, "w": int, "h": int},

+

+ // Face detection confidence.

+ // If `detector_backend` == `skip`, will be 0 and nonsense.

+ "face_confidence": float

+ }

+ """

+ return representation.represent(

+ img_path=img_path,

+ model_name=model_name,

+ enforce_detection=enforce_detection,

+ detector_backend=detector_backend,

+ align=align,

+ normalization=normalization,

+ )

+

+

+def stream(

+ db_path: str = "",

+ model_name: str = "VGG-Face",

+ detector_backend: str = "opencv",

+ distance_metric: str = "cosine",

+ enable_face_analysis: bool = True,

+ source: Any = 0,

+ time_threshold: int = 5,

+ frame_threshold: int = 5,

+) -> None:

+ """

+ This function applies real time face recognition and facial attribute analysis

+

+ Parameters:

+ db_path (string): facial database path. You should store some .jpg files in this folder.

+

+ model_name (string): VGG-Face, Facenet, Facenet512, OpenFace, DeepFace, DeepID, Dlib,

+ ArcFace, SFace

+

+ detector_backend (string): opencv, retinaface, mtcnn, ssd, dlib, mediapipe or yolov8.

+

+ distance_metric (string): cosine, euclidean, euclidean_l2

+

+ enable_facial_analysis (boolean): Set this to False to just run face recognition

+

+ source: Set this to 0 for access web cam. Otherwise, pass exact video path.

+

+ time_threshold (int): how many second analyzed image will be displayed

+

+ frame_threshold (int): how many frames required to focus on face

+

+ """

+

+ time_threshold = max(time_threshold, 1)

+ frame_threshold = max(frame_threshold, 1)

+

+ realtime.analysis(

+ db_path=db_path,

+ model_name=model_name,

+ detector_backend=detector_backend,

+ distance_metric=distance_metric,

+ enable_face_analysis=enable_face_analysis,

+ source=source,

+ time_threshold=time_threshold,

+ frame_threshold=frame_threshold,

+ )

+

+

+def extract_faces(

+ img_path: Union[str, np.ndarray],

+ target_size: Tuple[int, int] = (224, 224),

+ detector_backend: str = "opencv",

+ enforce_detection: bool = True,

+ align: bool = True,

+ grayscale: bool = False,

+) -> List[Dict[str, Any]]:

+ """

+ This function applies pre-processing stages of a face recognition pipeline

+ including detection and alignment

+

+ Parameters:

+ img_path: exact image path, numpy array (BGR) or base64 encoded image.

+ Source image can have many face. Then, result will be the size of number

+ of faces appearing in that source image.

+

+ target_size (tuple): final shape of facial image. black pixels will be

+ added to resize the image.

+

+ detector_backend (string): face detection backends are retinaface, mtcnn,

+ opencv, ssd or dlib

+

+ enforce_detection (boolean): function throws exception if face cannot be

+ detected in the fed image. Set this to False if you do not want to get

+ an exception and run the function anyway.

+

+ align (boolean): alignment according to the eye positions.

+

+ grayscale (boolean): extracting faces in rgb or gray scale

+

+ Returns:

+ list of dictionaries. Each dictionary will have facial image itself,

+ extracted area from the original image and confidence score.

+

+ """

+

+ return detection.extract_faces(

+ img_path=img_path,

+ target_size=target_size,

+ detector_backend=detector_backend,

+ enforce_detection=enforce_detection,

+ align=align,

+ grayscale=grayscale,

+ )

+

+

+# ---------------------------

+# deprecated functions

+

+

+@deprecated(version="0.0.78", reason="Use DeepFace.extract_faces instead of DeepFace.detectFace")

+def detectFace(

+ img_path: Union[str, np.ndarray],

+ target_size: tuple = (224, 224),

+ detector_backend: str = "opencv",

+ enforce_detection: bool = True,

+ align: bool = True,

+) -> Union[np.ndarray, None]:

+ """

+ Deprecated function. Use extract_faces for same functionality.

+

+ This function applies pre-processing stages of a face recognition pipeline

+ including detection and alignment

+

+ Parameters:

+ img_path: exact image path, numpy array (BGR) or base64 encoded image.

+ Source image can have many face. Then, result will be the size of number

+ of faces appearing in that source image.

+

+ target_size (tuple): final shape of facial image. black pixels will be

+ added to resize the image.

+

+ detector_backend (string): face detection backends are retinaface, mtcnn,

+ opencv, ssd or dlib

+

+ enforce_detection (boolean): function throws exception if face cannot be

+ detected in the fed image. Set this to False if you do not want to get

+ an exception and run the function anyway.

+

+ align (boolean): alignment according to the eye positions.

+

+ grayscale (boolean): extracting faces in rgb or gray scale

+

+ Returns:

+ detected and aligned face as numpy array

+

+ """

+ logger.warn("Function detectFace is deprecated. Use extract_faces instead.")

+ face_objs = extract_faces(

+ img_path=img_path,

+ target_size=target_size,

+ detector_backend=detector_backend,

+ enforce_detection=enforce_detection,

+ align=align,

+ grayscale=False,

+ )

+

+ extracted_face = None

+ if len(face_objs) > 0:

+ extracted_face = face_objs[0]["face"]

+ return extracted_face

+

+

+# ---------------------------

+# main

+

+functions.initialize_folder()

+

+

+def cli() -> None:

+ """

+ command line interface function will be offered in this block

+ """

+ import fire

+

+ fire.Fire()

diff --git a/modules/deepface-master/deepface/__init__.py b/modules/deepface-master/deepface/__init__.py

new file mode 100644

index 000000000..e69de29bb

diff --git a/modules/deepface-master/deepface/basemodels/ArcFace.py b/modules/deepface-master/deepface/basemodels/ArcFace.py

new file mode 100644

index 000000000..b3059bda7

--- /dev/null

+++ b/modules/deepface-master/deepface/basemodels/ArcFace.py

@@ -0,0 +1,157 @@

+import os

+import gdown

+import tensorflow as tf

+from deepface.commons import functions

+from deepface.commons.logger import Logger

+

+logger = Logger(module="basemodels.ArcFace")

+

+# pylint: disable=unsubscriptable-object

+

+# --------------------------------

+# dependency configuration

+

+tf_version = int(tf.__version__.split(".", maxsplit=1)[0])

+

+if tf_version == 1:

+ from keras.models import Model

+ from keras.engine import training

+ from keras.layers import (

+ ZeroPadding2D,

+ Input,

+ Conv2D,

+ BatchNormalization,

+ PReLU,

+ Add,

+ Dropout,

+ Flatten,

+ Dense,

+ )

+else:

+ from tensorflow.keras.models import Model

+ from tensorflow.python.keras.engine import training

+ from tensorflow.keras.layers import (

+ ZeroPadding2D,

+ Input,

+ Conv2D,

+ BatchNormalization,

+ PReLU,

+ Add,

+ Dropout,

+ Flatten,

+ Dense,

+ )

+

+

+def loadModel(

+ url="https://github.com/serengil/deepface_models/releases/download/v1.0/arcface_weights.h5",

+) -> Model:

+ base_model = ResNet34()

+ inputs = base_model.inputs[0]

+ arcface_model = base_model.outputs[0]

+ arcface_model = BatchNormalization(momentum=0.9, epsilon=2e-5)(arcface_model)

+ arcface_model = Dropout(0.4)(arcface_model)

+ arcface_model = Flatten()(arcface_model)

+ arcface_model = Dense(512, activation=None, use_bias=True, kernel_initializer="glorot_normal")(

+ arcface_model

+ )

+ embedding = BatchNormalization(momentum=0.9, epsilon=2e-5, name="embedding", scale=True)(

+ arcface_model

+ )

+ model = Model(inputs, embedding, name=base_model.name)

+

+ # ---------------------------------------

+ # check the availability of pre-trained weights

+

+ home = functions.get_deepface_home()

+

+ file_name = "arcface_weights.h5"

+ output = home + "/.deepface/weights/" + file_name

+

+ if os.path.isfile(output) != True:

+

+ logger.info(f"{file_name} will be downloaded to {output}")

+ gdown.download(url, output, quiet=False)

+

+ # ---------------------------------------

+

+ model.load_weights(output)

+

+ return model

+

+

+def ResNet34() -> Model:

+

+ img_input = Input(shape=(112, 112, 3))

+

+ x = ZeroPadding2D(padding=1, name="conv1_pad")(img_input)

+ x = Conv2D(

+ 64, 3, strides=1, use_bias=False, kernel_initializer="glorot_normal", name="conv1_conv"

+ )(x)

+ x = BatchNormalization(axis=3, epsilon=2e-5, momentum=0.9, name="conv1_bn")(x)

+ x = PReLU(shared_axes=[1, 2], name="conv1_prelu")(x)

+ x = stack_fn(x)

+

+ model = training.Model(img_input, x, name="ResNet34")

+

+ return model

+

+

+def block1(x, filters, kernel_size=3, stride=1, conv_shortcut=True, name=None):

+ bn_axis = 3

+

+ if conv_shortcut:

+ shortcut = Conv2D(

+ filters,

+ 1,

+ strides=stride,

+ use_bias=False,

+ kernel_initializer="glorot_normal",

+ name=name + "_0_conv",

+ )(x)

+ shortcut = BatchNormalization(

+ axis=bn_axis, epsilon=2e-5, momentum=0.9, name=name + "_0_bn"

+ )(shortcut)

+ else:

+ shortcut = x

+

+ x = BatchNormalization(axis=bn_axis, epsilon=2e-5, momentum=0.9, name=name + "_1_bn")(x)

+ x = ZeroPadding2D(padding=1, name=name + "_1_pad")(x)

+ x = Conv2D(

+ filters,

+ 3,

+ strides=1,

+ kernel_initializer="glorot_normal",

+ use_bias=False,

+ name=name + "_1_conv",

+ )(x)

+ x = BatchNormalization(axis=bn_axis, epsilon=2e-5, momentum=0.9, name=name + "_2_bn")(x)

+ x = PReLU(shared_axes=[1, 2], name=name + "_1_prelu")(x)

+

+ x = ZeroPadding2D(padding=1, name=name + "_2_pad")(x)

+ x = Conv2D(

+ filters,

+ kernel_size,

+ strides=stride,

+ kernel_initializer="glorot_normal",

+ use_bias=False,

+ name=name + "_2_conv",

+ )(x)

+ x = BatchNormalization(axis=bn_axis, epsilon=2e-5, momentum=0.9, name=name + "_3_bn")(x)

+

+ x = Add(name=name + "_add")([shortcut, x])

+ return x

+

+

+def stack1(x, filters, blocks, stride1=2, name=None):

+ x = block1(x, filters, stride=stride1, name=name + "_block1")

+ for i in range(2, blocks + 1):

+ x = block1(x, filters, conv_shortcut=False, name=name + "_block" + str(i))

+ return x

+

+

+def stack_fn(x):

+ x = stack1(x, 64, 3, name="conv2")

+ x = stack1(x, 128, 4, name="conv3")

+ x = stack1(x, 256, 6, name="conv4")

+ return stack1(x, 512, 3, name="conv5")

diff --git a/modules/deepface-master/deepface/basemodels/DeepID.py b/modules/deepface-master/deepface/basemodels/DeepID.py

new file mode 100644

index 000000000..fa128b083

--- /dev/null

+++ b/modules/deepface-master/deepface/basemodels/DeepID.py

@@ -0,0 +1,84 @@

+import os

+import gdown

+import tensorflow as tf

+from deepface.commons import functions

+from deepface.commons.logger import Logger

+

+logger = Logger(module="basemodels.DeepID")

+

+tf_version = int(tf.__version__.split(".", maxsplit=1)[0])

+

+if tf_version == 1:

+ from keras.models import Model

+ from keras.layers import (

+ Conv2D,

+ Activation,

+ Input,

+ Add,

+ MaxPooling2D,

+ Flatten,

+ Dense,

+ Dropout,

+ )

+else:

+ from tensorflow.keras.models import Model

+ from tensorflow.keras.layers import (

+ Conv2D,

+ Activation,

+ Input,

+ Add,

+ MaxPooling2D,

+ Flatten,

+ Dense,

+ Dropout,

+ )

+

+# pylint: disable=line-too-long

+

+

+# -------------------------------------

+

+

+def loadModel(

+ url="https://github.com/serengil/deepface_models/releases/download/v1.0/deepid_keras_weights.h5",

+) -> Model:

+

+ myInput = Input(shape=(55, 47, 3))

+

+ x = Conv2D(20, (4, 4), name="Conv1", activation="relu", input_shape=(55, 47, 3))(myInput)

+ x = MaxPooling2D(pool_size=2, strides=2, name="Pool1")(x)

+ x = Dropout(rate=0.99, name="D1")(x)

+

+ x = Conv2D(40, (3, 3), name="Conv2", activation="relu")(x)

+ x = MaxPooling2D(pool_size=2, strides=2, name="Pool2")(x)

+ x = Dropout(rate=0.99, name="D2")(x)

+

+ x = Conv2D(60, (3, 3), name="Conv3", activation="relu")(x)

+ x = MaxPooling2D(pool_size=2, strides=2, name="Pool3")(x)

+ x = Dropout(rate=0.99, name="D3")(x)

+

+ x1 = Flatten()(x)

+ fc11 = Dense(160, name="fc11")(x1)

+

+ x2 = Conv2D(80, (2, 2), name="Conv4", activation="relu")(x)

+ x2 = Flatten()(x2)

+ fc12 = Dense(160, name="fc12")(x2)

+

+ y = Add()([fc11, fc12])

+ y = Activation("relu", name="deepid")(y)

+

+ model = Model(inputs=[myInput], outputs=y)

+

+ # ---------------------------------

+

+ home = functions.get_deepface_home()

+

+ if os.path.isfile(home + "/.deepface/weights/deepid_keras_weights.h5") != True:

+ logger.info("deepid_keras_weights.h5 will be downloaded...")

+

+ output = home + "/.deepface/weights/deepid_keras_weights.h5"

+ gdown.download(url, output, quiet=False)

+

+ model.load_weights(home + "/.deepface/weights/deepid_keras_weights.h5")

+

+ return model

diff --git a/modules/deepface-master/deepface/basemodels/DlibResNet.py b/modules/deepface-master/deepface/basemodels/DlibResNet.py

new file mode 100644

index 000000000..3cc57f10b

--- /dev/null

+++ b/modules/deepface-master/deepface/basemodels/DlibResNet.py

@@ -0,0 +1,86 @@

+import os

+import bz2

+import gdown

+import numpy as np

+from deepface.commons import functions

+from deepface.commons.logger import Logger

+

+logger = Logger(module="basemodels.DlibResNet")

+

+# pylint: disable=too-few-public-methods

+

+

+class DlibResNet:

+ def __init__(self):

+

+ ## this is not a must dependency. do not import it in the global level.

+ try:

+ import dlib

+ except ModuleNotFoundError as e:

+ raise ImportError(

+ "Dlib is an optional dependency, ensure the library is installed."

+ "Please install using 'pip install dlib' "

+ ) from e

+

+ self.layers = [DlibMetaData()]

+

+ # ---------------------

+

+ home = functions.get_deepface_home()

+ weight_file = home + "/.deepface/weights/dlib_face_recognition_resnet_model_v1.dat"

+

+ # ---------------------

+

+ # download pre-trained model if it does not exist

+ if os.path.isfile(weight_file) != True:

+ logger.info("dlib_face_recognition_resnet_model_v1.dat is going to be downloaded")

+

+ file_name = "dlib_face_recognition_resnet_model_v1.dat.bz2"

+ url = f"http://dlib.net/files/{file_name}"

+ output = f"{home}/.deepface/weights/{file_name}"

+ gdown.download(url, output, quiet=False)

+

+ zipfile = bz2.BZ2File(output)

+ data = zipfile.read()

+ newfilepath = output[:-4] # discard .bz2 extension

+ with open(newfilepath, "wb") as f:

+ f.write(data)

+

+ # ---------------------

+

+ model = dlib.face_recognition_model_v1(weight_file)

+ self.__model = model

+

+ # ---------------------

+

+ # return None # classes must return None

+

+ def predict(self, img_aligned: np.ndarray) -> np.ndarray:

+

+ # functions.detectFace returns 4 dimensional images

+ if len(img_aligned.shape) == 4:

+ img_aligned = img_aligned[0]

+

+ # functions.detectFace returns bgr images

+ img_aligned = img_aligned[:, :, ::-1] # bgr to rgb

+

+ # deepface.detectFace returns an array in scale of [0, 1]

+ # but dlib expects in scale of [0, 255]

+ if img_aligned.max() <= 1:

+ img_aligned = img_aligned * 255

+

+ img_aligned = img_aligned.astype(np.uint8)

+

+ model = self.__model

+

+ img_representation = model.compute_face_descriptor(img_aligned)

+

+ img_representation = np.array(img_representation)

+ img_representation = np.expand_dims(img_representation, axis=0)

+

+ return img_representation

+

+

+class DlibMetaData:

+ def __init__(self):

+ self.input_shape = [[1, 150, 150, 3]]

diff --git a/modules/deepface-master/deepface/basemodels/DlibWrapper.py b/modules/deepface-master/deepface/basemodels/DlibWrapper.py

new file mode 100644

index 000000000..51f0a0b98

--- /dev/null

+++ b/modules/deepface-master/deepface/basemodels/DlibWrapper.py

@@ -0,0 +1,6 @@

+from typing import Any

+from deepface.basemodels.DlibResNet import DlibResNet

+

+

+def loadModel() -> Any:

+ return DlibResNet()

diff --git a/modules/deepface-master/deepface/basemodels/Facenet.py b/modules/deepface-master/deepface/basemodels/Facenet.py

new file mode 100644

index 000000000..1ad4f8791

--- /dev/null

+++ b/modules/deepface-master/deepface/basemodels/Facenet.py

@@ -0,0 +1,1642 @@

+import os

+import gdown

+import tensorflow as tf

+from deepface.commons import functions

+from deepface.commons.logger import Logger

+

+logger = Logger(module="basemodels.Facenet")

+

+# --------------------------------

+# dependency configuration

+

+tf_version = int(tf.__version__.split(".", maxsplit=1)[0])

+

+if tf_version == 1:

+ from keras.models import Model

+ from keras.layers import Activation

+ from keras.layers import BatchNormalization

+ from keras.layers import Concatenate

+ from keras.layers import Conv2D

+ from keras.layers import Dense

+ from keras.layers import Dropout

+ from keras.layers import GlobalAveragePooling2D

+ from keras.layers import Input

+ from keras.layers import Lambda

+ from keras.layers import MaxPooling2D

+ from keras.layers import add

+ from keras import backend as K

+else:

+ from tensorflow.keras.models import Model

+ from tensorflow.keras.layers import Activation

+ from tensorflow.keras.layers import BatchNormalization

+ from tensorflow.keras.layers import Concatenate

+ from tensorflow.keras.layers import Conv2D

+ from tensorflow.keras.layers import Dense

+ from tensorflow.keras.layers import Dropout

+ from tensorflow.keras.layers import GlobalAveragePooling2D

+ from tensorflow.keras.layers import Input

+ from tensorflow.keras.layers import Lambda

+ from tensorflow.keras.layers import MaxPooling2D

+ from tensorflow.keras.layers import add

+ from tensorflow.keras import backend as K

+

+# --------------------------------

+

+

+def scaling(x, scale):

+ return x * scale

+

+

+def InceptionResNetV2(dimension=128) -> Model:

+

+ inputs = Input(shape=(160, 160, 3))

+ x = Conv2D(32, 3, strides=2, padding="valid", use_bias=False, name="Conv2d_1a_3x3")(inputs)

+ x = BatchNormalization(

+ axis=3, momentum=0.995, epsilon=0.001, scale=False, name="Conv2d_1a_3x3_BatchNorm"

+ )(x)

+ x = Activation("relu", name="Conv2d_1a_3x3_Activation")(x)

+ x = Conv2D(32, 3, strides=1, padding="valid", use_bias=False, name="Conv2d_2a_3x3")(x)

+ x = BatchNormalization(

+ axis=3, momentum=0.995, epsilon=0.001, scale=False, name="Conv2d_2a_3x3_BatchNorm"

+ )(x)

+ x = Activation("relu", name="Conv2d_2a_3x3_Activation")(x)

+ x = Conv2D(64, 3, strides=1, padding="same", use_bias=False, name="Conv2d_2b_3x3")(x)

+ x = BatchNormalization(

+ axis=3, momentum=0.995, epsilon=0.001, scale=False, name="Conv2d_2b_3x3_BatchNorm"

+ )(x)

+ x = Activation("relu", name="Conv2d_2b_3x3_Activation")(x)

+ x = MaxPooling2D(3, strides=2, name="MaxPool_3a_3x3")(x)

+ x = Conv2D(80, 1, strides=1, padding="valid", use_bias=False, name="Conv2d_3b_1x1")(x)

+ x = BatchNormalization(

+ axis=3, momentum=0.995, epsilon=0.001, scale=False, name="Conv2d_3b_1x1_BatchNorm"

+ )(x)

+ x = Activation("relu", name="Conv2d_3b_1x1_Activation")(x)

+ x = Conv2D(192, 3, strides=1, padding="valid", use_bias=False, name="Conv2d_4a_3x3")(x)

+ x = BatchNormalization(

+ axis=3, momentum=0.995, epsilon=0.001, scale=False, name="Conv2d_4a_3x3_BatchNorm"

+ )(x)

+ x = Activation("relu", name="Conv2d_4a_3x3_Activation")(x)

+ x = Conv2D(256, 3, strides=2, padding="valid", use_bias=False, name="Conv2d_4b_3x3")(x)

+ x = BatchNormalization(

+ axis=3, momentum=0.995, epsilon=0.001, scale=False, name="Conv2d_4b_3x3_BatchNorm"

+ )(x)

+ x = Activation("relu", name="Conv2d_4b_3x3_Activation")(x)

+

+ # 5x Block35 (Inception-ResNet-A block):

+ branch_0 = Conv2D(

+ 32, 1, strides=1, padding="same", use_bias=False, name="Block35_1_Branch_0_Conv2d_1x1"

+ )(x)

+ branch_0 = BatchNormalization(

+ axis=3,

+ momentum=0.995,

+ epsilon=0.001,

+ scale=False,

+ name="Block35_1_Branch_0_Conv2d_1x1_BatchNorm",

+ )(branch_0)

+ branch_0 = Activation("relu", name="Block35_1_Branch_0_Conv2d_1x1_Activation")(branch_0)

+ branch_1 = Conv2D(

+ 32, 1, strides=1, padding="same", use_bias=False, name="Block35_1_Branch_1_Conv2d_0a_1x1"

+ )(x)

+ branch_1 = BatchNormalization(

+ axis=3,

+ momentum=0.995,

+ epsilon=0.001,

+ scale=False,

+ name="Block35_1_Branch_1_Conv2d_0a_1x1_BatchNorm",

+ )(branch_1)

+ branch_1 = Activation("relu", name="Block35_1_Branch_1_Conv2d_0a_1x1_Activation")(branch_1)

+ branch_1 = Conv2D(

+ 32, 3, strides=1, padding="same", use_bias=False, name="Block35_1_Branch_1_Conv2d_0b_3x3"

+ )(branch_1)

+ branch_1 = BatchNormalization(

+ axis=3,

+ momentum=0.995,

+ epsilon=0.001,

+ scale=False,

+ name="Block35_1_Branch_1_Conv2d_0b_3x3_BatchNorm",

+ )(branch_1)